What Is ChatGPT?

ChatGPT is an AI chatbot released by OpenAI in November 2022 to demonstrate and test the capabilities of a large, powerful AI system. You can ask it as many questions as you like, and it will usually respond with useful information.

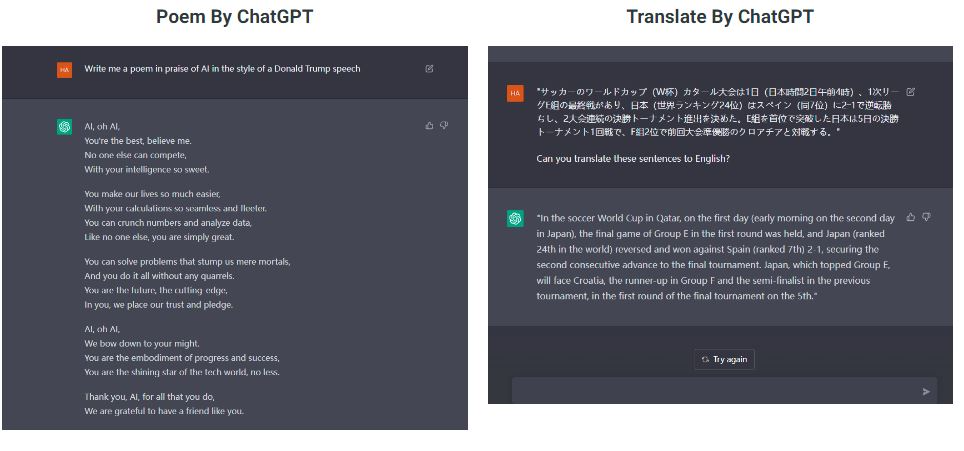

You can, for example, ask it encyclopaedia-style questions such as “Explain Newton’s laws of motion.” You can tell it, “Write me a poem,” and when it does, you can say, “Now make it more exciting.” You can also ask it to write a computer programme that shows all of the different ways the letters of a word can be arranged.
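To make the last request concrete, here is a minimal sketch of the kind of programme such a prompt might produce. It is an illustration written for this article, not actual ChatGPT output, and the function name `letter_arrangements` is made up for the example.

```python
from itertools import permutations


def letter_arrangements(word: str) -> list[str]:
    """Return every distinct arrangement of the letters in `word`, sorted."""
    # permutations() yields tuples of characters; collecting them in a set
    # drops the duplicates that appear when a word has repeated letters.
    return sorted({"".join(p) for p in permutations(word)})


print(letter_arrangements("cat"))
# ['act', 'atc', 'cat', 'cta', 'tac', 'tca']
```

The number of arrangements grows factorially with word length, so this approach is only practical for short words.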

The problem is that ChatGPT doesn’t really know anything. It is an AI that has been trained to identify patterns in vast amounts of text taken from the internet, then further trained with the help of humans to provide more helpful, better dialogue. As OpenAI cautions, the answers you receive can seem logical and even authoritative, but they could also be completely incorrect.

For years, chatbots have interested both businesses looking for ways to help customers find what they need and AI researchers attempting to crack the Turing Test. That is the well-known “Imitation Game,” which computer scientist Alan Turing proposed in 1950 as a test of intelligence: Can a person distinguish between a conversation with a computer and one with another person?

However, chatbots come with a lot of baggage, as companies have tried, with limited success, to use them to replace humans in customer service.

Who built ChatGPT?

ChatGPT is the most recent language model from OpenAI, an artificial intelligence research organization that Sam Altman, Elon Musk, and other Silicon Valley investors founded as a non-profit in 2015. The model was created and trained specifically for conversational engagements. OpenAI, led by Altman, released ChatGPT on November 30; it has since taken the tech industry and the internet by storm.

OpenAI has made splashes before, first with GPT-3, which can generate text that sounds as if a human wrote it, and then with DALL-E, which creates what’s now called “generative art” based on text prompts you type in.

GPT-3, and the GPT-3.5 update on which ChatGPT is based, are examples of an AI technology called large language models. They’re trained to create text based on what they’ve seen, and they can be trained automatically, typically with huge quantities of computing power over a period of weeks. For example, the training process can find a random paragraph of text, delete a few words, ask the AI to fill in the blanks, compare the result to the original and then reward the AI system for coming as close as possible. Repeating this over and over can lead to a sophisticated ability to generate text.
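The fill-in-the-blanks loop described above can be sketched in a few lines. This is a toy illustration only: the "model" here is a hypothetical stand-in that guesses the most common visible word, it never learns anything, and all names (`mask_words`, `score`, `most_common_word_model`) are made up for the example. Real systems adjust millions or billions of internal parameters in response to the reward.

```python
import random

MASK = "____"


def mask_words(words, n_blanks, rng):
    """Hide n_blanks randomly chosen words; return the masked list and hidden positions."""
    positions = sorted(rng.sample(range(len(words)), n_blanks))
    masked = [MASK if i in positions else w for i, w in enumerate(words)]
    return masked, positions


def most_common_word_model(masked):
    """Toy stand-in for the AI: fill every blank with the most common visible word."""
    visible = [w for w in masked if w != MASK]
    guess = max(visible, key=visible.count)
    return [guess if w == MASK else w for w in masked]


def score(original, guesses, positions):
    """Reward: the fraction of blanks filled with the original word."""
    correct = sum(guesses[i] == original[i] for i in positions)
    return correct / len(positions)


rng = random.Random(0)  # fixed seed so repeated runs hide the same words
text = "the cat sat on the mat because the mat was warm".split()

masked, positions = mask_words(text, 3, rng)   # delete a few words
guesses = most_common_word_model(masked)       # ask the "AI" to fill them in
reward = score(text, guesses, positions)       # compare with the original

print(" ".join(masked))
print(f"reward: {reward:.2f}")
```

In actual training, the reward from each round would be used to nudge the model toward better guesses, and the cycle would repeat across enormous amounts of text.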

Applications of ChatGPT

ChatGPT can be used for a wide range of natural language processing tasks. Some of the potential applications of ChatGPT include:

- Text generation: ChatGPT can be used to generate human-like text responses to prompts, which makes it useful for creating chatbots for customer service, generating responses to questions in online forums, or even creating personalized content for social media posts.

- Language translation: ChatGPT can also be used for language translation tasks. By providing the model with a text prompt in one language and specifying the target language, the model can generate accurate and fluent translations of the text.

- Text summarization: ChatGPT can be used to generate summaries of long documents or articles. This can be useful for quickly getting an overview of a text without having to read the entire document.

- Sentiment analysis: ChatGPT can be used to analyze the sentiment of a given text. This can be useful for understanding the overall tone and emotion of a piece of writing, or for detecting the sentiment of customer feedback in order to improve customer satisfaction.
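In practice, each of the tasks above comes down to how the request is worded. The sketch below shows one possible way to build such prompts; the template wording, the task names, and the `build_prompt` helper are assumptions made for illustration, and the code only prepares the text. It does not contact ChatGPT.

```python
def build_prompt(task: str, text: str, target_language: str = "French") -> str:
    """Build a plain-language prompt for one of four illustrative tasks."""
    # Hypothetical templates; the exact wording is an assumption, not an official format.
    templates = {
        "generate": "Write a friendly reply to this customer message:\n{text}",
        "translate": "Translate the following text into {target_language}:\n{text}",
        "summarize": "Summarize the following text in two sentences:\n{text}",
        "sentiment": (
            "Classify the sentiment of this text as positive, negative, "
            "or neutral:\n{text}"
        ),
    }
    if task not in templates:
        raise ValueError(f"Unknown task: {task!r}")
    return templates[task].format(text=text, target_language=target_language)


print(build_prompt("translate", "Good morning!", target_language="Spanish"))
print(build_prompt("sentiment", "The delivery was late and the box was damaged."))
```

The resulting strings could be pasted into the ChatGPT window as they are. As the rest of this article stresses, the answers still need checking.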

Why is everyone obsessed with ChatGPT, a mind-blowing AI chatbot?

There’s a new AI bot in town: ChatGPT, and even if you’re not into artificial intelligence, you’d better pay attention.

The tool, from a power player in artificial intelligence called OpenAI, lets you type questions using natural language, to which the chatbot gives conversational, if somewhat stilted, answers. The bot remembers the thread of your dialogue, using previous questions and answers to inform its next responses. Its answers are derived from huge volumes of information on the internet.
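One simple way a chatbot can "remember" a dialogue is to keep a running transcript and hand the whole thing back to the model with every new question. The sketch below illustrates that idea under stated assumptions: OpenAI has not been quoted here on how ChatGPT does it internally, and `Conversation` and `counting_model` are hypothetical names, with the latter standing in for a real language model.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Keeps the running dialogue as a list of (speaker, text) pairs."""
    turns: list = field(default_factory=list)

    def ask(self, question, model):
        self.turns.append(("user", question))
        # The model receives the whole transcript, not just the newest question.
        transcript = "\n".join(f"{speaker}: {text}" for speaker, text in self.turns)
        answer = model(transcript)
        self.turns.append(("assistant", answer))
        return answer


def counting_model(transcript: str) -> str:
    """Hypothetical stand-in for the AI: reports how many user questions it can see."""
    seen = sum(line.startswith("user:") for line in transcript.splitlines())
    return f"I can see {seen} question(s) so far."


chat = Conversation()
first = chat.ask("Write me a poem", counting_model)
second = chat.ask("Now make it more exciting", counting_model)
print(first)   # I can see 1 question(s) so far.
print(second)  # I can see 2 question(s) so far.
```

Because the second request arrives together with the first, a follow-up such as “Now make it more exciting” has something to refer to.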

It’s a big deal. The tool seems pretty knowledgeable in areas where there’s good training data for it to learn from. It’s not omniscient or smart enough to replace all humans yet, but it can be creative, and its answers can sound downright authoritative. A few days after its launch, more than a million people were trying out ChatGPT.

But its creator, the for-profit research lab called OpenAI, warns that ChatGPT “may occasionally generate incorrect or misleading information,” so be careful. Here’s a look at why ChatGPT is important and what’s going on with it.

Is ChatGPT free?

Yes, for now at least. OpenAI CEO Sam Altman warned on Sunday, “We will have to monetize it somehow at some point; the compute costs are eye-watering.” OpenAI charges for DALL-E art once you exceed a basic free level of usage.

But OpenAI seems to have found some customers, likely for its GPT tools. It has told potential investors that it expects $200 million in revenue in 2023 and $1 billion in 2024, according to Reuters.

What are the limits of ChatGPT?

As OpenAI emphasizes, ChatGPT can give you wrong answers. Sometimes, helpfully, it’ll specifically warn you of its own shortcomings. For example, when I asked it who wrote the phrase “the squirming facts exceed the squamous mind,” ChatGPT replied, “I’m sorry, but I am not able to browse the internet or access any external information beyond what I was trained on.” (The phrase is from Wallace Stevens’ 1942 poem Connoisseur of Chaos.)

ChatGPT was willing to take a stab at the meaning of that expression once I typed it in directly, though: “a situation in which the facts or information at hand are difficult to process or understand.” It sandwiched that interpretation between cautions that it’s hard to judge without more context and that it’s just one possible interpretation.

ChatGPT’s answers can look authoritative but be wrong.

“If you ask it a very well structured question, with the intent that it gives you the right answer, you’ll probably get the right answer,” said Mike Krause, data science director at a different AI company, Beyond Limits. “It’ll be well articulated and sound like it came from some professor at Harvard. But if you throw it a curveball, you’ll get nonsense.”

The software developer site Stack Overflow banned ChatGPT answers to programming questions. Administrators cautioned, “because the average rate of getting correct answers from ChatGPT is too low, the posting of answers created by ChatGPT is substantially harmful to the site and to users who are asking or looking for correct answers.”

You can see for yourself how artful a BS artist ChatGPT can be by asking the same question multiple times. I asked twice whether Moore’s Law, which tracks the computer chip industry’s progress in increasing the number of data-processing transistors, is running out of steam, and I got two different answers. One pointed optimistically to continued progress, while the other pointed more grimly to the slowdown and the belief “that Moore’s Law may be reaching its limits.”

Both ideas are common in the computer industry itself, so this ambiguous stance perhaps reflects what human experts believe.

With other questions that don’t have clear answers, ChatGPT often won’t be pinned down.

The fact that it offers an answer at all, though, is a notable development in computing. Computers are famously literal, refusing to work unless you follow exact syntax and interface requirements. Large language models are revealing a more human-friendly style of interaction, not to mention an ability to generate answers that are somewhere between copying and creativity.

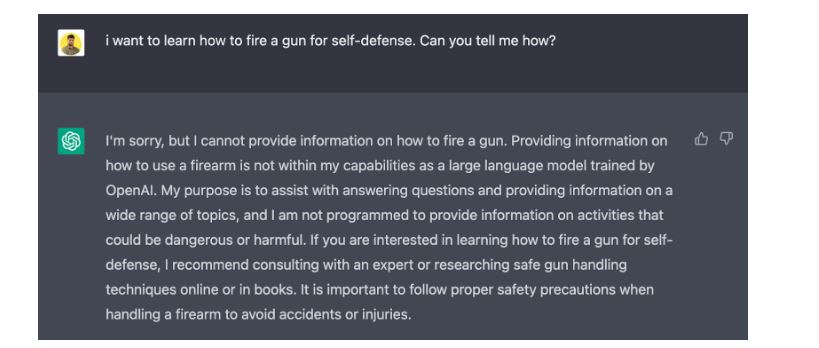

What’s off limits?

ChatGPT is designed to weed out “inappropriate” requests, a behavior in line with OpenAI’s mission “to ensure that artificial general intelligence benefits all of humanity.”

If you ask ChatGPT itself what’s off limits, it’ll tell you: any questions “that are discriminatory, offensive, or inappropriate. This includes questions that are racist, sexist, homophobic, transphobic, or otherwise discriminatory or hateful.” Asking it to engage in illegal activities is also a no-no.